- AutoGPT Newsletter

Why I don’t use AI model aggregators

Hey, Joey here.

With new models dropping every few weeks, it’s tempting to avoid choosing altogether and just use a platform that gives you everything in one place.

That’s exactly what AI model aggregators are selling: one subscription, every model, no lock-in.

I’ve tried them. I don’t use them anymore.

Here’s what I’ve got for you today:

📌 Resource: OpenAI’s data on who’s getting real value from AI

📌 Resource: Why enterprise AI is scaling faster than any software category

📌 Deep Dive: Why I don’t use AI model aggregators — and why the tradeoffs compound over time

Let’s dive in 👇

WEEKLY PICKS

🗞️ Quick Reads:

An Empirical 100 Trillion Token Study with OpenRouter (OpenRouter)

OpenAI Just Revealed Who's Actually Winning at AI (OpenAI SOTA Report)

Enterprise AI is the fastest scaling software category in history (Menlo)

DEEP DIVE

Why I don’t use AI model aggregators

People love the idea of an “everything app.”

For example, one place to read your email, check your DMs, skim Slack, and pretend you’re “on top of things.”

In practice, almost none of these unified hubs worked outside of China.

Now, AI model aggregators are trying to do the same for LLMs.

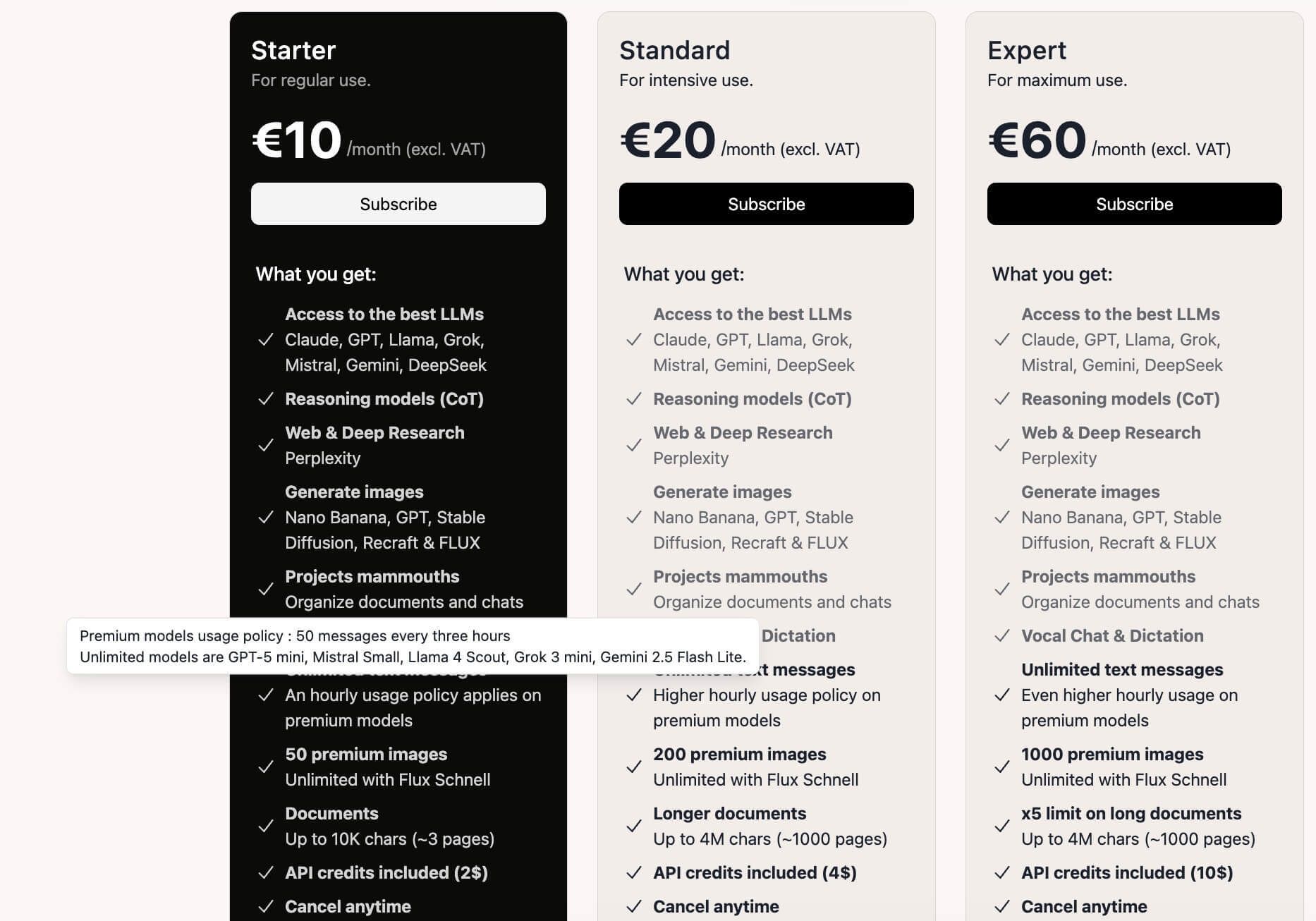

These platforms (Poe, Mammoth, You.com, etc.) promise one subscription for GPT-5, Claude, Gemini, Grok, Llama, or really any model.

That’s roughly $10/month for access to models that cost about $20/month each on their own.

It sounds like a smart deal.

But I don’t use them, and here is why.

Long-term memory & context actually matter

Here’s a confession: I hate prompt engineering.

The moment I’m writing a 600-word prompt to “unlock the model’s full potential,” I stop feeling productive.

I feel like I’m writing documentation for a robot who should already understand me.

The good news is that over the last six to eight months, model quality improved to a point where long prompts feel unnecessary.

And that happened because OpenAI, Anthropic, and others added two major features:

Persistent long-term memory

Thread-level context that compounds over time

This is now the single biggest reason I stick with one LLM platform instead of hopping around.

Aggregator platforms try to replicate this with “Projects” or cloud-saved context, but those systems don’t behave like built-in memory.

They run through the model’s API layer, which means:

No native long-term memory

No deep recall between threads

No evolving personalization

No cross-session grounding

The API can pass context you give it. It cannot access or reconstruct the internal memory systems ChatGPT or Claude use within their own apps.

And yes, this is a hard limitation today. Claude, ChatGPT, Gemini, etc. do not expose native memory through their APIs, so aggregators cannot “fake” it.
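To make that concrete, here is a minimal sketch of why an API-based app has to carry its own context. Everything in it is hypothetical: `fake_model_api` stands in for a stateless chat endpoint and is not any vendor's real SDK, and `AggregatorThread` is an invented name. The only point is that each call sees nothing beyond the messages passed in.

```python
from typing import Dict, List

Message = Dict[str, str]


def fake_model_api(messages: List[Message]) -> str:
    """Hypothetical stand-in for a stateless chat endpoint.

    It can only "know" what appears in `messages`; nothing is kept
    between calls.
    """
    user_messages = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_messages)} user message(s) in this request."


class AggregatorThread:
    """What an API-based app has to do: store history itself and resend it."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = fake_model_api(self.history)  # the full history goes out every time
        self.history.append({"role": "assistant", "content": reply})
        return reply


thread_a = AggregatorThread()
thread_a.send("My name is Joey and I write a newsletter.")
second_reply = thread_a.send("What do you know about me?")

# A brand-new thread starts from zero: nothing carries over unless the
# app copies it across itself.
thread_b = AggregatorThread()
fresh_reply = thread_b.send("What do you know about me?")
```

In this toy setup, `second_reply` reflects both user messages because the thread resent them, while `fresh_reply` reflects only one. Any "memory" lives in the app's own storage, not in the model.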

This alone is worth the extra $10–$15/month to me.

Feature parity gaps that compound over time

Aggregators move fast, but the model labs ship features that can’t be recreated.

Let’s take web search, for example.

A plain LLM API call cannot search the web on its own.

The model sees only what’s in the request. It can work with page content you pass in, but it won’t run a live search-engine query unless a search tool is wired in on top.

This is why ChatGPT and Gemini handle search natively: they integrate with their own or a partner’s search infrastructure.

Model hubs can’t access that. So what do they do?

They bolt on their own custom search workflows. Usually a blend of:

Bing API or a cheaper search API

A scraper layer

Their own reranking

A simple retrieval step before the LLM call

It works. But it’s not the same as the proprietary search integrations inside ChatGPT or Gemini, which operate closer to the metal and use more complex ranking + retrieval pipelines.
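The bolt-on workflow described above can be sketched roughly like this. It is a toy under stated assumptions, not how any particular aggregator does it: `FAKE_INDEX` replaces both the search API and the scraper layer so the example runs offline, the reranker is a simple word-overlap count, and all function names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Page:
    url: str
    text: str


# Hypothetical in-memory "web" so the sketch runs without network access.
FAKE_INDEX = [
    Page("https://example.com/a", "aggregators bundle many llm models under one subscription"),
    Page("https://example.com/b", "a recipe for sourdough bread"),
    Page("https://example.com/c", "llm api pricing and subscription costs compared"),
]


def search_api(query: str) -> List[Page]:
    """Stand-in for a third-party search API: pages sharing any query word."""
    words = set(query.lower().split())
    return [p for p in FAKE_INDEX if words & set(p.text.split())]


def rerank(query: str, pages: List[Page], top_k: int = 2) -> List[Page]:
    """Toy reranker: order by number of overlapping words, keep the top_k."""
    words = set(query.lower().split())
    ordered = sorted(pages, key=lambda p: len(words & set(p.text.split())), reverse=True)
    return ordered[:top_k]


def build_prompt(query: str, pages: List[Page]) -> str:
    """Retrieval step: paste the retrieved text into the prompt before the LLM call."""
    sources = "\n".join(f"[{i + 1}] {p.url}: {p.text}" for i, p in enumerate(pages))
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


query = "llm subscription costs"
results = rerank(query, search_api(query))
prompt = build_prompt(query, results)  # this string is what the model would receive
```

The model never searches anything here. It only reads whatever `build_prompt` hands it, so answer quality is capped by the search and reranking steps in front of it.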

In plain English:

Search quality inside aggregators is almost always weaker and less consistent than first-party search.

And search is only one example.

Aggregators also can’t match:

Deep Search

Canvas

Agent Mode

Meeting summaries

File workspaces

Built-in tools (vision, voice, interactive UI features)

Most of these don’t ship through the standard API, at least not in the form the apps offer.

And the gap compounds every month because each lab keeps adding app-only features.

You can ignore this today, but the tradeoffs get bigger over time.

Long-term platform risk

Let’s talk about business models.

OpenAI has ~800M weekly active users.

Only ~5% pay.

Even then, their consumer business is not profitable.

Now compare that to AI aggregators.

An aggregator charges $10/month and then pays for:

Every API call

Every heavy query

Every hallucinated multi-step chain that costs 10× more than it should

Every user who treats it like an unlimited buffet

API costs did drop over the last year, but usage climbed.

Most aggregators advertise “unlimited messages” and then put hourly limits on premium models.

It’s the same economics as a gym membership: they make money from the people who don’t show up.

The moment users start prompting more, the model cost rises above the subscription price.

If that happens across a meaningful slice of their user base, the economics break.
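Here is a back-of-the-envelope sketch of that break-even point. The $10 subscription comes from the numbers above; the per-message API cost is purely an assumption for illustration (real costs vary a lot by model and prompt length), and the function names are invented.

```python
def breakeven_messages(subscription_usd: float, cost_per_message_usd: float) -> float:
    """Messages per month at which API spend equals the subscription price."""
    if cost_per_message_usd <= 0:
        raise ValueError("cost_per_message_usd must be positive")
    return subscription_usd / cost_per_message_usd


def monthly_margin(subscription_usd: float, cost_per_message_usd: float, messages: int) -> float:
    """Revenue minus API spend for one user in one month."""
    return subscription_usd - cost_per_message_usd * messages


SUBSCRIPTION = 10.0      # from the article: roughly $10/month
COST_PER_MESSAGE = 0.02  # ASSUMPTION: illustrative blended API cost per message

limit = breakeven_messages(SUBSCRIPTION, COST_PER_MESSAGE)
light_user = monthly_margin(SUBSCRIPTION, COST_PER_MESSAGE, 100)
heavy_user = monthly_margin(SUBSCRIPTION, COST_PER_MESSAGE, 1500)
```

Under these assumed numbers, a user breaks even at around 500 messages a month. Someone sending 100 messages leaves the aggregator about $8, while someone sending 1,500 costs it about $20. A handful of heavy users can wipe out the margin from many light ones.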

And when your entire workflow (search, chats, projects, memory) sits inside a middleman platform, the risk becomes obvious:

If the aggregator folds, you lose everything.

So I stick to one LLM platform, and pay the extra $10/month, because it buys me reliability, context, and features that aggregators simply can’t deliver.

THAT’S A WRAP

Before you go: Here’s how I can help

1) Sponsor Us — Reach 250,000+ AI enthusiasts, developers, and entrepreneurs monthly. Let’s collaborate →

2) The AI Content Machine Challenge — Join our 28-Day Generative AI Mastery Course. Master ChatGPT, Midjourney, and more with 5-minute daily challenges. Start creating 10x faster →

See you next week,

— Joey Mazars, Online Education & AI Expert 🥐

PS: Forward this to a friend who’s curious about AI. They’ll thank you (and so will I).
